This blog series is split into three parts:

Part 1: Why Binary Neural Networks

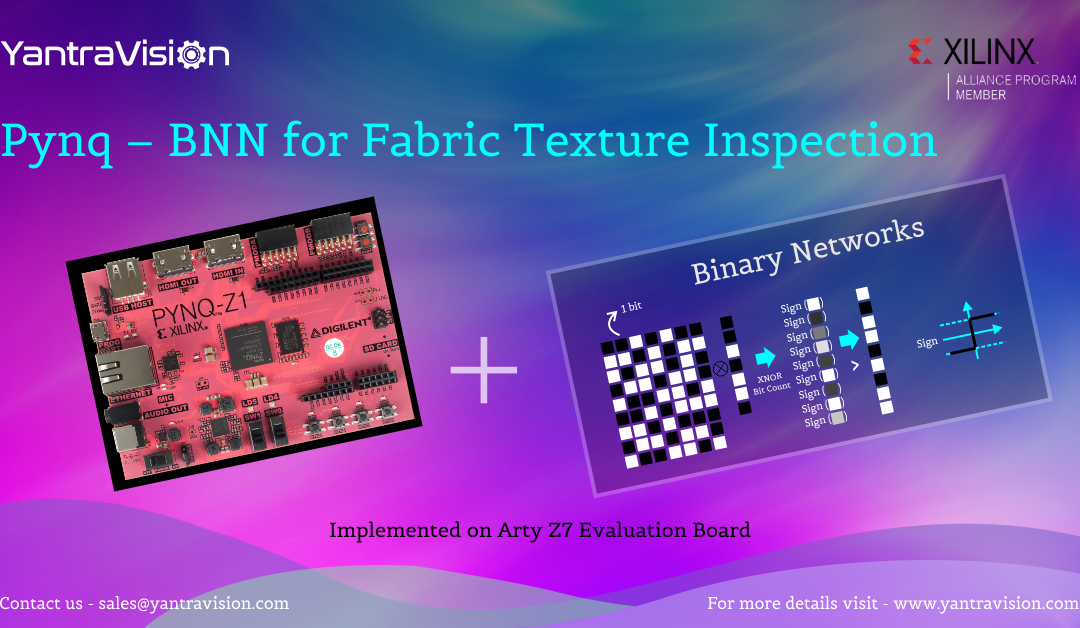

Part 2: Why PYNQ?

Part 3: Pynq – BNN Implementation

Introduction

We develop high-speed embedded vision modules for use cases in the textile industry. These modules are built using our proprietary Image Signal Processors (ISP), which run on the Zynq platform. One of the challenges we face is distinguishing the colour and texture of objects from the background. We use deep learning for such use cases and, because they have hard real-time requirements, we implement them on embedded FPGA processors. In this context, we continuously explore methods (such as DPU and Pynq – BNN) for implementing deep learning on embedded FPGAs. This blog post describes our experience implementing such applications using Pynq – BNN.

Why Binary Neural Networks?

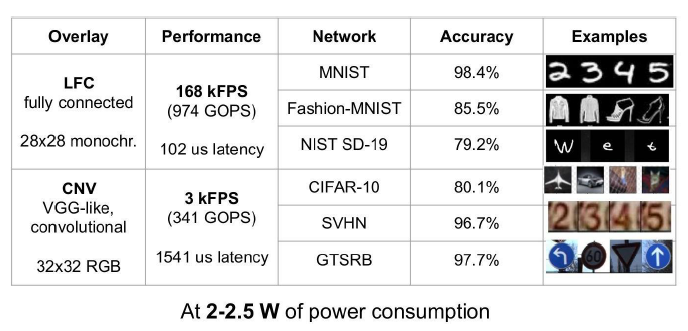

Many artificial intelligence use cases, such as image classification and speech recognition, need to run at the edge. Although DNNs can handle such applications, today's larger networks, with more parameters than before, call for more resources (processing power, memory, battery life, and so on). These resources are often critically constrained in applications running on embedded devices.

Traditionally, DNNs have used floating-point values, mostly 32-bit. This precision was thought to be necessary for accuracy, and it worked well because CPUs and GPUs handle floating-point operations efficiently. For edge applications with fewer resources, however, the focus has shifted to training networks whose weights can be converted to a quantized representation with minimal loss of performance.

Quantization is the process of compressing the floating-point input/output values in a DNN into a fixed-point representation, typically 8-bit or 16-bit integers.
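To make the idea concrete, here is a minimal sketch of one common scheme, symmetric linear quantization to 8-bit signed integers. It is an illustration only: the function names and the sample weights are made up for this post, and real toolchains typically use more elaborate calibration.

```python
def quantize(values, num_bits=8):
    """Symmetric linear quantization of floats to signed integers.

    Returns the integer values and the scale needed to map them back.
    """
    qmax = 2 ** (num_bits - 1) - 1              # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.41, 0.05, -1.27]
q_weights, scale = quantize(weights)
restored = dequantize(q_weights, scale)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy traded away for cheaper integer arithmetic.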

Even after quantization from floating point to fixed point, these networks still need arithmetic operations, such as multiplication and addition, on fixed-point values. Although these are faster than floating-point operations, they still require relatively complex logic and can consume a lot of power.

We address these challenges by using a Binary Neural Network (BNN). A BNN is well suited to resource-constrained environments because it replaces floating-point or fixed-point arithmetic with significantly more efficient bitwise operations. As a result, a BNN needs far less space, memory bandwidth, and power.
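The bitwise trick most commonly used in BNNs is to constrain weights and activations to +1 or -1, store them as single bits, and compute dot products with XNOR followed by a population count. The sketch below illustrates that general technique in plain Python; it is not taken from our FPGA implementation, and the function names and sample values are hypothetical.

```python
def binarize(values):
    """Sign function: map each real value to +1 or -1 (zero maps to +1)."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a list of +1/-1 values into one integer (+1 -> bit 1, -1 -> bit 0)."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_word, w_word, n):
    """Dot product of two n-element +1/-1 vectors stored as bit words.

    XNOR yields 1 wherever the two signs agree (product +1) and 0 where
    they differ (product -1), so the dot product is matches - mismatches,
    which equals 2 * matches - n.
    """
    mask = (1 << n) - 1
    xnor = ~(a_word ^ w_word) & mask
    matches = bin(xnor).count("1")
    return 2 * matches - n


# Hypothetical activations and weights, chosen only for illustration.
activations = binarize([0.7, -1.2, 0.1, -0.3, 2.0, -0.5, 0.0, 0.9])
weights = binarize([-0.4, -0.8, 0.6, 0.2, 1.1, -0.9, -0.1, 0.3])

bitwise_result = xnor_popcount_dot(
    pack_bits(activations), pack_bits(weights), len(weights)
)
reference_result = sum(a * w for a, w in zip(activations, weights))
```

Both results agree, but the bitwise version needs no multipliers at all: in hardware, the XNOR and the bit count map onto a few logic gates and a small adder tree.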

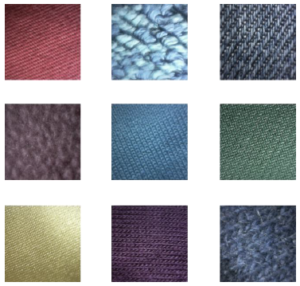

For this project, we trained a BNN on a fabric texture dataset containing 27 classes, drawn from a sample of 2000 images. We augmented the training data using 4 different illumination and angle settings.

For demonstration purposes, we chose 5 classes and packed them into a dataset that follows the CIFAR-10 format.
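As a rough illustration of what such packing involves, the sketch below assumes the binary variant of the CIFAR-10 format, in which each record is one label byte followed by 3072 pixel bytes for a 32x32 image: 1024 red values, then 1024 green, then 1024 blue, each in row-major order. The function names and the dummy image are hypothetical, and resizing the fabric images to 32x32 is assumed to have happened beforehand.

```python
IMAGE_SIDE = 32
PIXELS_PER_IMAGE = IMAGE_SIDE * IMAGE_SIDE      # 1024
RECORD_SIZE = 1 + 3 * PIXELS_PER_IMAGE          # 3073 bytes per record


def pack_record(label, pixels):
    """Build one CIFAR-10 binary record.

    label  -- class index, 0..255
    pixels -- 1024 (r, g, b) tuples in row-major order, each channel 0..255
    """
    if not 0 <= label <= 255:
        raise ValueError("label must fit in one byte")
    if len(pixels) != PIXELS_PER_IMAGE:
        raise ValueError("expected a 32x32 image")
    red = bytes(p[0] for p in pixels)
    green = bytes(p[1] for p in pixels)
    blue = bytes(p[2] for p in pixels)
    # Channels are stored as separate planes, not interleaved per pixel.
    return bytes([label]) + red + green + blue


def write_dataset(path, samples):
    """Write (label, pixels) pairs back to back into a single binary file."""
    with open(path, "wb") as f:
        for label, pixels in samples:
            f.write(pack_record(label, pixels))


# Dummy uniform image standing in for one resized fabric sample.
dummy_pixels = [(200, 120, 40)] * PIXELS_PER_IMAGE
record = pack_record(3, dummy_pixels)
```

With 5 classes, the labels simply run from 0 to 4, and the class names can be kept in a separate text file, as the original CIFAR-10 distribution does.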