Recap: Deep Learning on Xilinx Edge AI (DPU Implementation) – Part 1

Introduction

When developing Edge AI solutions, it is important to evaluate the solution on a standard Xilinx evaluation board to check whether the model fits on the silicon and meets the required performance targets, such as latency and accuracy. To make it easier to evaluate Deep Learning models on the ZCU106, we have developed a Targeted Reference Design (TRD). For more details on the YantraVision TRD for DPU, or if you would like to use it, write to us at sales@yantravision.com.

TRD Design for Edge AI on ZCU106

Deep Learning Processor Unit (DPU): The Xilinx Deep Learning Processor Unit (DPU) is a programmable engine dedicated to convolutional neural networks. The DPU has a specialized instruction set that lets it run many convolutional neural networks efficiently.

Tool Chains Used

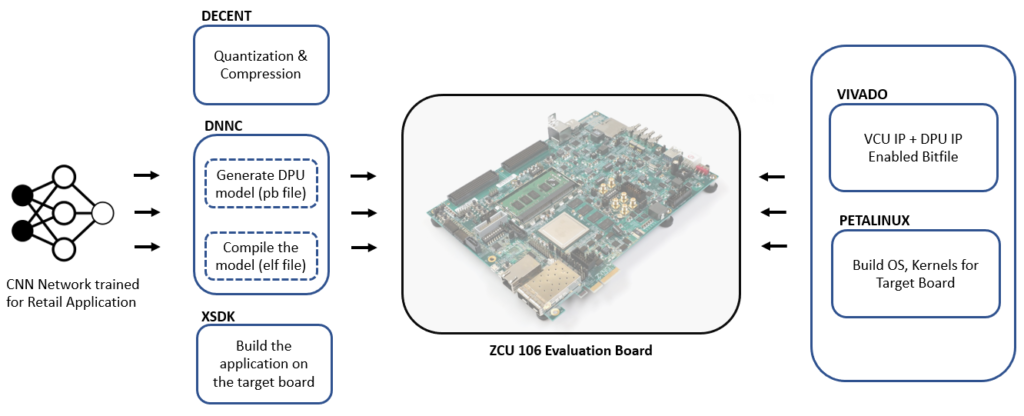

- The Deep Neural Network Development Kit (DNNDK) v3.1 is used to implement models on the Edge AI platform.

- Deep Compression Tool (DECENT): This tool prunes models by 5x to 50x and quantizes them to INT16 or INT8. Pruning and quantization improve performance, efficiency and latency with minimal impact on accuracy.

- Deep Neural Network Compiler (DNNC): The DNNC compiles the neural network models into a DPU assembly file.

- Xilinx Vivado 2019.1 is used to integrate the DPU IP into the hardware design.

- Xilinx PetaLinux 2019.1 is used to build the board-specific components and include the settings required for the DPU.
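To make the quantization step in the DECENT item above more concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is an illustration of the general idea only, not DECENT's actual algorithm. The function names `quantize_int8` and `dequantize` and the sample weights are hypothetical and chosen for this example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to INT8 (illustrative only)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the INT8 range.
    quantized = [max(-128, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from INT8 codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, used only to show the round trip.
weights = [0.5, -1.27, 0.003, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("codes:", codes)
print("scale:", scale)
print("max round-trip error:", max_error)
```

Each weight is stored as one 8-bit code plus a shared scale, which is where the memory and bandwidth savings come from. The small round-trip error is the accuracy cost that the quantization tool tries to keep negligible.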

Development workflow

Applications

The Edge AI implementation has many applications in surveillance, manufacturing and retail. Some of the applications that YantraVision is working on are:

- Surface Inspection

- Defect Classification

- Fabric Inspection

- Number Plate Recognition

- Object Recognition in Retail

The next TRD we are working on is Video Instream Analytics, which combines the VCU and DPU IP. If you need more information on this, write to us at sales@yantravision.com.